About

What matters is giving simulation and test results a context

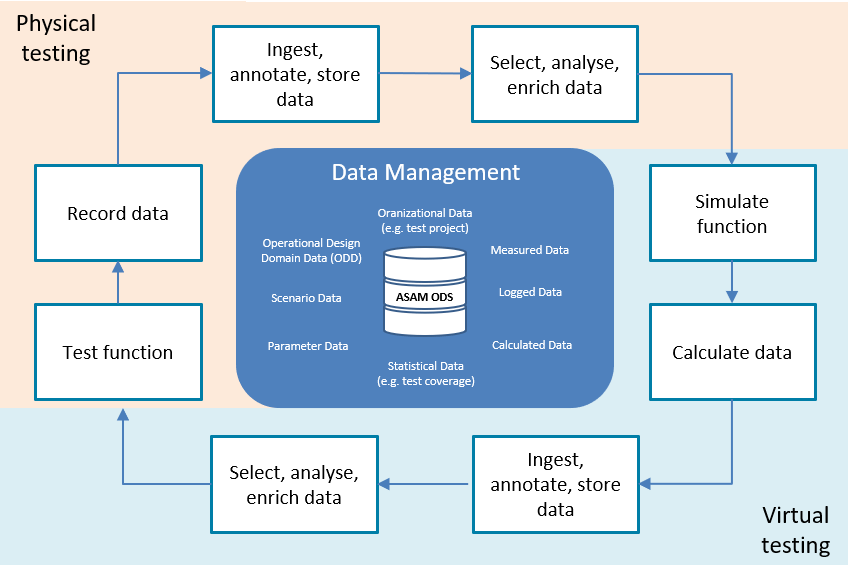

Comprehensively documenting the results obtained during the verification and validation of autonomous driving functions is becoming an increasing challenge for vehicle manufacturers and suppliers. File directories and proprietary data silos based on vendor-specific tools cannot be a solution in the long term. What is required is a standardized platform for test data management that helps to cope efficiently with the manifold proof obligations arising from increasing safety and legal regulations. ASAM ODS is a proven standard that can be used to implement such a platform.

The underlying data model of ASAM ODS is flexible enough to describe the organizational and technical context in which individual results have been measured, logged or calculated. For example, the characteristics of a driving scenario (consisting of “maneuvers”, “objects” and “actions”) on which the results of a test drive or simulation are based can be comprehensively documented using appropriate attributes. The same applies to the features of the sensors or sensor models used in a test or simulation, including their exact positioning on the test object or digital twin. ASAM ODS allows almost any metadata description to be defined and stored. In this way, different types of test and simulation results can be found, interpreted, compared, compiled, shared and evaluated at any time.
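The idea of giving results a searchable context can be illustrated with a small sketch. It is not the ASAM ODS data model or API: the classes `Scenario`, `Sensor` and `ResultRecord`, the helper `find_results` and all sample values are hypothetical and only show how descriptive attributes make results findable.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Sensor:
    # Hypothetical sensor description with its mounting position in metres.
    name: str
    kind: str
    position_m: tuple[float, float, float]


@dataclass(frozen=True)
class Scenario:
    # Hypothetical scenario description using the terms from the text.
    maneuver: str
    objects: tuple[str, ...]
    actions: tuple[str, ...]


@dataclass
class ResultRecord:
    result_id: str
    source: str  # e.g. "test drive" or "simulation"
    scenario: Scenario
    sensors: list[Sensor] = field(default_factory=list)


def find_results(results, *, maneuver=None, sensor_kind=None):
    """Return the records whose context matches every criterion that is given."""
    matches = []
    for record in results:
        if maneuver is not None and record.scenario.maneuver != maneuver:
            continue
        if sensor_kind is not None and not any(
            sensor.kind == sensor_kind for sensor in record.sensors
        ):
            continue
        matches.append(record)
    return matches


# Invented sample data, for illustration only.
radar = Sensor("front_radar", "radar", (3.6, 0.0, 0.5))
lidar = Sensor("roof_lidar", "lidar", (1.2, 0.0, 1.7))
results = [
    ResultRecord("r1", "test drive", Scenario("cut-in", ("car", "truck"), ("brake",)), [radar]),
    ResultRecord("r2", "simulation", Scenario("cut-in", ("car",), ("brake",)), [lidar]),
    ResultRecord("r3", "simulation", Scenario("lane change", ("car",), ("steer",)), [lidar]),
]

cut_in_with_lidar = find_results(results, maneuver="cut-in", sensor_kind="lidar")
```

Because test drives and simulations share the same descriptive attributes, a single query can return results from both sources, which is what makes them comparable in the first place.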

The data actually measured, logged or calculated are either imported into ASAM ODS’ own mass data format (MDF) or kept in their own file formats and linked to the metadata. The latter is also possible for additional descriptive information that is stored, for example, in openCRG, openDRIVE, openLABEL, openODD and openSCENARIO files.
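Linking files to metadata, rather than importing them, might look roughly like the following sketch. It makes several assumptions: `MeasurementEntry`, `LinkedFile` and `link` are hypothetical names, not part of ASAM ODS, and the suffix-to-format table is a simplified illustration, not a complete or authoritative mapping.

```python
from dataclasses import dataclass, field
from pathlib import PurePosixPath

# Assumed suffix-to-format mapping, for illustration only.
KNOWN_FORMATS = {
    ".mf4": "MDF",
    ".xodr": "openDRIVE",
    ".xosc": "openSCENARIO",
    ".crg": "openCRG",
}


@dataclass
class LinkedFile:
    path: str
    file_format: str


@dataclass
class MeasurementEntry:
    # Hypothetical metadata entry that references files instead of copying them.
    name: str
    metadata: dict
    files: list = field(default_factory=list)

    def link(self, path: str) -> LinkedFile:
        """Attach a file reference and record its format based on the suffix."""
        suffix = PurePosixPath(path).suffix.lower()
        linked = LinkedFile(path, KNOWN_FORMATS.get(suffix, "unknown"))
        self.files.append(linked)
        return linked


# Invented sample entry and paths.
entry = MeasurementEntry("run_042", {"maneuver": "cut-in", "source": "simulation"})
entry.link("data/run_042.mf4")
entry.link("maps/track.xodr")
entry.link("scenarios/cut_in.xosc")
```

The files stay where they are and keep their format; only the reference and the recorded format become part of the searchable metadata.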

The well-thought-out HTTP interface of ASAM ODS ensures that different applications can access the metadata in a standardized way and correctly interpret the meaning of the associated result data when querying them from the database.

It should be clear by now that storing large amounts of measured, logged or calculated test data requires more than a powerful technical infrastructure. What matters is giving meaning to the data, independent of vendor-specific tools. This is the decisive prerequisite for sharing and evaluating test and simulation data between teams, locations and authorities in a meaningful way. At the same time, it’s reassuring to know that the conceptual approach of ASAM ODS can also be transferred to big data and cloud applications.